Lionel Messi And Quickobook: What A Football Legend Teaches Us About Healthcare Access In India

Introduction

Lionel Messi is known across the world for his football skills, discipline, and consistency. His journey from a young boy with a growth hormone deficiency to one of the greatest footballers of all time teaches powerful life lessons. These lessons go beyond sports. They apply equally to health, self-care, and getting the right support at the right time.

In India, millions of people struggle not because treatment does not exist, but because access to healthcare is delayed or confusing. This is where Quickobook steps in. Just as Messi relies on preparation, routine, and the right guidance, Quickobook focuses on using technology in healthcare to connect patients with the right doctors.

This blog explores how the values behind Lionel Messi's career align with the mission of Quickobook, and why these lessons matter for everyday health decisions.

Why Compare Lionel Messi and Quickobook?

At first glance, football and healthcare may seem unrelated. Look deeper, though, and both rely on preparation, teamwork, discipline, and timely decisions.

Messi succeeded because:

- He received the right medical care early
- He followed strict routines
- He trusted experts
- He stayed consistent

Quickobook works on the same principles:

- Early access to doctors
- Easy appointment booking
- Trust and transparency
- Consistent, patient-first care

Both show that success, whether in sports or health, depends on access, discipline, and the right system.

Lionel Messi’s Health Journey: A Lesson in Early Medical Care

As a child, Lionel Messi was diagnosed with growth hormone deficiency, a condition that affects height and physical development. Without treatment, his football career might never have taken off.

His family ensured:

- Early diagnosis
- Specialist consultation
- Long-term treatment
- Consistent follow-ups

This story highlights one clear truth: timely healthcare access can change lives. In India, many children and adults face delays in diagnosis due to lack of awareness or difficulty finding doctors.
Platforms like Quickobook help reduce these delays by making doctor discovery simple and fast.

Healthcare Access in India: The Real Challenge

India has skilled doctors and advanced hospitals. The real problem is not availability but access. Common issues include:

- Long waiting times
- Confusion about which doctor to consult
- Distance to clinics
- Lack of digital booking options
- Missed follow-ups

Just as a footballer cannot perform without the right support team, patients struggle without a clear healthcare pathway.

Quickobook: Simplifying Healthcare with Technology

Quickobook is designed to solve everyday healthcare problems using technology. It acts as a bridge between patients and doctors. Key benefits include:

- Easy online doctor search
- Appointment booking in minutes
- Verified doctor profiles
- Reduced waiting time
- Better follow-up care

For patients, this means less stress. For doctors, it means better patient management. This balance reflects the teamwork seen in professional sports like football.

Discipline and Routine: Messi’s Biggest Strength

Lionel Messi is not just talented; he is disciplined. His routine includes:

- Regular health check-ups
- Fitness monitoring
- Injury prevention
- Mental health focus
- Rest and recovery

These habits are equally important for non-athletes, because regular health check-ups help detect problems early. Quickobook encourages preventive healthcare by making routine doctor visits easier to schedule.

Preventive Healthcare: What Messi Teaches Every Indian Family

Preventive care means seeing a doctor before a problem becomes serious, and Messi’s long career illustrates its value. Preventive healthcare includes:

- Annual health check-ups
- Monitoring blood pressure and blood sugar
- Early screening tests
- Vaccinations
- Lifestyle counselling

Quickobook supports this approach by helping families find general physicians and specialists nearby without long delays.

Technology in Healthcare: Changing How India Sees Doctors

Just as modern football uses data, fitness tracking, and video analysis, modern healthcare uses digital tools. Technology in healthcare helps with:

- Online appointment booking
- Teleconsultation
- Digital health records
- Reminders for follow-ups
- Better doctor-patient communication

Quickobook uses these tools to make healthcare less complicated and more human.

Mental Health: An Often Ignored Lesson from Sports

Top athletes work with psychologists to manage stress, pressure, and expectations. Messi is no exception. In daily life, mental health is often ignored due to stigma or lack of access. Quickobook helps people find mental health professionals discreetly and easily, promoting balanced well-being.

Why Trust Matters in Both Football and Healthcare

Messi trusts his coaches, doctors, and physiotherapists, and this trust allows him to focus on performance. Similarly, patients need:

- Verified doctors
- Transparent information
- Honest reviews
- Clear communication

Quickobook builds trust by listing verified doctors along with patient feedback, helping users make informed choices.

From Stadiums to Clinics: The Same Winning Formula

The same formula that builds champions also builds healthy communities:

- Early action
- Expert guidance
- Consistency
- Discipline
- The right use of technology

Quickobook applies this formula to Indian healthcare, inspired by stories like Lionel Messi’s.

When to See a Doctor: Don’t Delay

You should book a doctor appointment if you have:

- Persistent pain
- Fever lasting more than 3 days
- Sudden weight loss
- Breathing difficulty
- Mental stress or anxiety
- Chronic lifestyle conditions

Early consultation can save money, time, and health.

Risks of Delayed Healthcare

Ignoring symptoms can lead to:

- Complications
- Longer treatment
- Higher medical costs
- Hospitalisation
- Permanent damage

Messi’s early treatment prevented long-term problems. The same principle applies to everyone.

Lifestyle Lessons from Lionel Messi

Messi’s lifestyle focuses on balance:

- Healthy diet
- Regular exercise
- Proper sleep
- Stress management

Doctors available on Quickobook can help guide patients toward healthier routines suited to Indian lifestyles.

Conclusion

Lionel Messi’s life proves that talent alone is not enough. Timely medical care, discipline, and access to experts play a key role in long-term success. In the same way, Quickobook is changing how Indians approach healthcare by using technology to improve access to doctors. Whether you are managing a condition or simply staying healthy, the right doctor at the right time can make all the difference.

Quickobook Call to Action

- Book verified doctors near you
- Avoid long waiting lines
- Manage your health digitally

Take control of your well-being today with Quickobook.

Frequently Asked Questions

Q1. Who is Lionel Messi?
A. Lionel Messi is a world-famous footballer known for his skill, discipline, and consistency.

Q2. What health issue did Messi face as a child?
A. He had growth hormone deficiency, which was treated with early medical care.

Q3. Why is Messi’s story relevant to healthcare?
A. It shows how early access to treatment can change lives.

Q4. What is Quickobook?
A. Quickobook is a digital platform for booking doctor appointments easily.

Q5. How does Quickobook improve healthcare access?
A. It uses technology to connect patients with doctors quickly.

Q6. Is Quickobook available in India?
A. Yes, it is designed for Indian healthcare needs.

Q7. Can I book general physicians on Quickobook?
A. Yes, you can book both general physicians and specialists.

Q8. Does Quickobook reduce waiting time?
A. Yes, it helps you schedule appointments in advance.

Q9. What is preventive healthcare?
A. It means seeing doctors early to avoid serious illness.

Q10. Why is preventive care important?
A. It reduces complications and healthcare costs.

Q11. How does technology help healthcare?
A. It simplifies booking, follow-ups, and communication.

Q12. Can Quickobook help with mental health?
A. Yes, mental health professionals are available.

Q13. Is Quickobook suitable for families?
A. Yes, it supports healthcare for all age groups.

Q14. Does Messi focus on mental fitness?
A. Yes, mental strength is key in professional sports.

Q15. Can regular check-ups prevent disease?
A. Yes, many conditions are manageable if detected early.

Q16. Is online doctor booking safe?
A. Yes, when you use verified platforms like Quickobook.

Q17. How often should adults see a doctor?
A. At least once a year for routine check-ups.

Q18. What conditions need immediate consultation?
A. Chest pain, breathlessness, and severe fever.

Q19. Can lifestyle changes improve health?
A. Yes, diet, exercise, and sleep make a big difference.

Q20. Does Quickobook support follow-ups?
A. Yes, it helps maintain continuity of care.

Q21. Why is trust important in healthcare?
A. It improves treatment outcomes and patient comfort.

Q22. How does Quickobook verify doctors?
A. Through qualification and registration checks.

Q23. Can rural patients use Quickobook?
A. Yes, though availability depends on the doctors located nearby.

Q24. Does Quickobook offer teleconsultation?
A. Many doctors on Quickobook provide online consultations.

Q25. What lesson does Messi teach about discipline?
A. Consistency leads to long-term success.

Q26. Is delayed treatment risky?
A. Yes, it can worsen health conditions.

Q27. Can Quickobook help with chronic diseases?
A. Yes, it helps you manage regular doctor visits.

Q28. Why is early diagnosis important?
A. It prevents complications and improves outcomes.

Q29. Does Quickobook save time?
A. Yes, by reducing time spent waiting at clinics.

Q30. Is healthcare access a problem in India?
A. Yes, especially due to delays and lack of awareness.

Q31. Can technology replace doctors?
A. No, it supports doctors rather than replacing them.

Q32. Does Messi follow a strict routine?
A. Yes, for fitness and injury prevention.

Q33. Can routine help non-athletes?
A. Yes, routines improve overall health.

Q34. Are regular tests necessary?
A. Yes, for early detection of health issues.

Q35. Does Quickobook list specialists?
A. Yes, across multiple medical fields.

Q36. Is Quickobook user-friendly?
A. Yes, it is designed for easy use.

Q37. Can older adults use Quickobook?
A. Yes, thanks to its simple booking options.

Q38. What role does awareness play in health?
A. It helps people seek care early.

Q39. Does Messi’s success rely only on talent?
A. No, it also relies on medical and professional support.

Q40. Can Quickobook help in emergencies?
A. It helps you find doctors quickly, but emergencies need immediate hospital care.

Q41. Is digital healthcare the future?
A. Yes, it improves efficiency and access.

Q42. Does Quickobook show doctor reviews?
A. Yes, patient feedback is available.

Q43. Can booking online reduce stress?
A. Yes, it avoids uncertainty and delays.

Q44. Are check-ups expensive?
A. Costs vary, but prevention can save money in the long term.

Q45. Can lifestyle counselling help?
A. Yes, doctors can guide you toward healthy habits.

Q46. Does Quickobook support preventive care?
A. Yes, by encouraging regular visits.

Q47. What is the biggest healthcare lesson from Messi?
A. Never delay proper medical care.

Q48. Is healthcare a team effort?
A. Yes, it involves the patient, the doctor, and the healthcare system.

Q49. Can one platform improve healthcare habits?
A. Yes, by making access easy and reliable.

Q50. How can I start using Quickobook?
A. Book your first doctor appointment online.


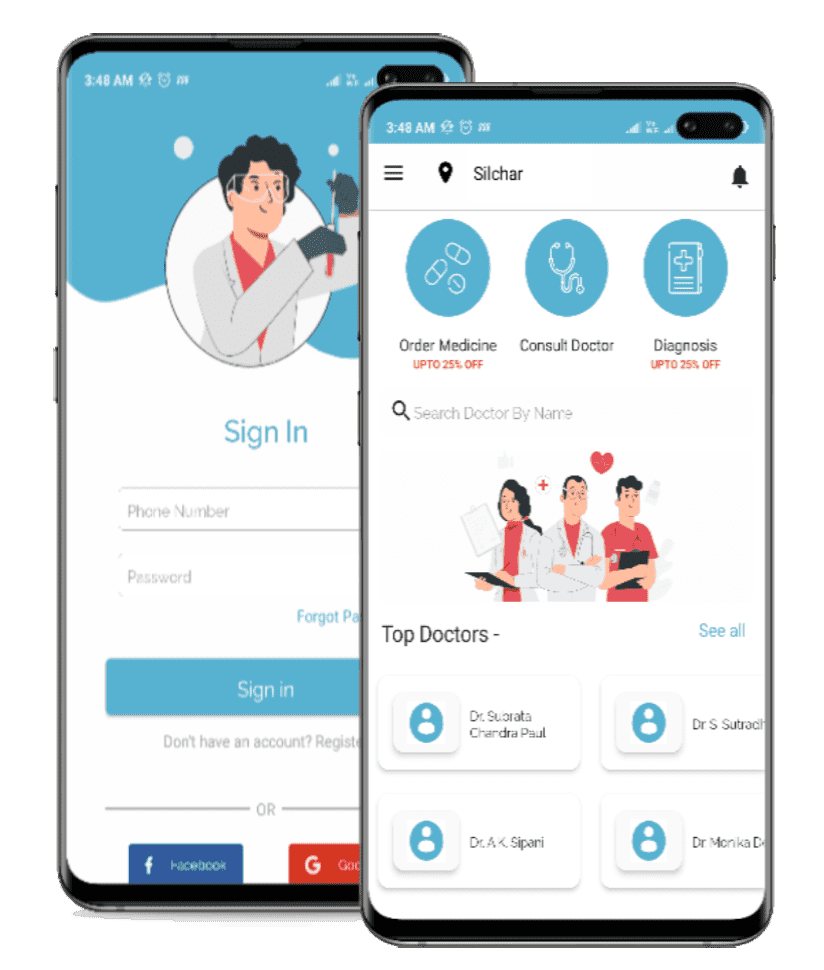
